Make Better API Calls Than ChatGPT (GPT-4) with Gorilla

A new approach to fine-tuning large language models to make better plugin calls

Large Language Models (LLMs) have become a force to be reckoned with. They excel at tasks such as logical reasoning, mathematical reasoning, and program synthesis. Yet there is one area where even the most advanced LLMs, such as GPT-4, falter: using tools effectively through API calls. They often fail to generate accurate input arguments, and they tend to hallucinate incorrect API usage. Enter Gorilla, a model that's changing the game.

The Gorilla Model

Gorilla is a fine-tuned model built on LLaMA, Meta's family of open foundation models. It isn't just another fine-tune: it surpasses GPT-4 at writing API calls. It also tackles one of the biggest problems with LLMs, their tendency to hallucinate API calls when prompted directly. Gorilla substantially reduces these hallucinations, which makes it a game-changer in the field.

Methodology

So how does Gorilla achieve this? It combines self-instruct fine-tuning with retrieval, which lets it accurately select from a large, overlapping, and changing set of tools, each expressed through its API and API documentation.
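To make the difference between plain prompting and retrieval concrete, here is a minimal sketch of how a prompt might be assembled in each mode. The helper name `build_prompt` and the exact sentence used to append the documentation are assumptions modeled loosely on the paper's description (the retrieved API documentation is attached to the user's instruction); this is not the authors' code.

```python
import json
from typing import Optional


def build_prompt(instruction: str, retrieved_doc: Optional[dict] = None) -> str:
    """Assemble the text fed to the model (hypothetical helper).

    Zero-shot mode: the user's instruction alone.
    Retriever-aware mode: the instruction followed by the retrieved API
    documentation serialized as JSON. The appended wording is an assumption.
    """
    if retrieved_doc is None:
        return instruction
    doc_json = json.dumps(retrieved_doc, sort_keys=True)
    return f"{instruction}\nUse this API documentation for reference: {doc_json}"


if __name__ == "__main__":
    doc = {
        "api_call": "torch.hub.load('pytorch/vision', 'fcn_resnet50', pretrained=True)",
        "functionality": "Semantic segmentation",
    }
    print(build_prompt("Segment the objects in a street photo."))
    print(build_prompt("Segment the objects in a street photo.", doc))
```

Keeping retrieval as plain text in the prompt means the same model can be queried with or without a retriever; only the input changes.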

To fine-tune Gorilla, the researchers constructed APIBench, a large collection of APIs with complex and often overlapping functionality. They built it by scraping ML APIs from public model hubs: TorchHub, TensorHub (TensorFlow Hub), and HuggingFace. For each API, they used self-instruct to generate synthetic natural-language instructions, yielding instruction-API pairs for training. Gorilla was then fine-tuned on APIBench with document retrieval, setting the stage for its impressive performance.
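As an illustration, an APIBench-style entry can be thought of as a structured documentation record plus a set of instructions that should map to it. The sketch below uses a subset of fields (domain, framework, api_name, api_call, api_arguments, description) loosely following the paper's description; the exact schema is an assumption, the `make_training_pairs` helper is hypothetical, and the two instructions are hand-written stand-ins for what the self-instruct step would generate.

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ApiDoc:
    # A subset of the fields an APIBench-style record might carry (assumed layout).
    domain: str
    framework: str
    api_name: str
    api_call: str
    api_arguments: Dict[str, str] = field(default_factory=dict)
    description: str = ""


def make_training_pairs(doc: ApiDoc, instructions: List[str]) -> List[Dict[str, str]]:
    """Pair each (self-instruct generated) instruction with the target API call."""
    return [{"instruction": text, "api_call": doc.api_call} for text in instructions]


doc = ApiDoc(
    domain="Semantic Segmentation",
    framework="PyTorch",
    api_name="fcn_resnet50",
    api_call="torch.hub.load('pytorch/vision', 'fcn_resnet50', pretrained=True)",
    api_arguments={"pretrained": "bool"},
    description="Fully convolutional network with a ResNet-50 backbone.",
)

# Hand-written stand-ins for instructions the self-instruct step would produce.
pairs = make_training_pairs(doc, [
    "Label every pixel in this photo of a city street.",
    "I need a model that separates people from the background in images.",
])

# Plain dict form, e.g. for serializing the record into a retrieval index.
record = asdict(doc)
```

Because many records share a domain and functionality (several segmentation models, several classifiers), the model has to learn to distinguish overlapping APIs rather than just recognize a task.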

Evaluation Results

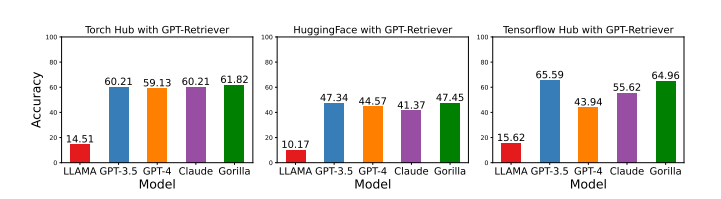

Gorilla's evaluation results are impressive, especially compared with models such as LLaMA, GPT-3.5, GPT-4, and Claude. Fine-tuned without retrieval and evaluated in a zero-shot setting, Gorilla achieved state-of-the-art performance, improving on GPT-4 by 20.43% and on ChatGPT by 10.75%. Against open-source models such as LLaMA, the improvement was as large as 83%.
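How is a generated API call judged correct? The paper uses abstract syntax tree (AST) sub-tree matching: roughly, generated code counts as correct if the reference API call appears as a sub-tree of it, and as a hallucination if it matches no API in the database. The sketch below is a much simplified version built on Python's standard `ast` module. Its matching rule (same dotted function name, reference positional arguments as a prefix, every reference keyword present with the same value) is an assumption for illustration, not the paper's exact matcher.

```python
import ast
from typing import List, Optional


def _dotted_name(node: ast.AST) -> Optional[str]:
    """Return 'a.b.c' for Name/Attribute chains, else None."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        base = _dotted_name(node.value)
        return f"{base}.{node.attr}" if base else None
    return None


def _calls(code: str) -> List[ast.Call]:
    """All call nodes in the parsed code (the code is parsed, never executed)."""
    return [n for n in ast.walk(ast.parse(code)) if isinstance(n, ast.Call)]


def matches_reference(generated: str, reference: str) -> bool:
    """True if some call in `generated` contains the reference call as a sub-tree."""
    ref = _calls(reference)[0]
    ref_name = _dotted_name(ref.func)
    ref_args = [ast.dump(a) for a in ref.args]
    ref_kw = {k.arg: ast.dump(k.value) for k in ref.keywords}
    for call in _calls(generated):
        if _dotted_name(call.func) != ref_name:
            continue
        gen_args = [ast.dump(a) for a in call.args]
        gen_kw = {k.arg: ast.dump(k.value) for k in call.keywords}
        if gen_args[: len(ref_args)] == ref_args and all(
            gen_kw.get(key) == val for key, val in ref_kw.items()
        ):
            return True
    return False


def is_hallucination(generated: str, api_database: List[str]) -> bool:
    """Generated code hallucinates if it matches no API in the database."""
    return not any(matches_reference(generated, ref) for ref in api_database)


ref = "torch.hub.load('pytorch/vision', 'fcn_resnet50', pretrained=True)"
good = "import torch\nmodel = torch.hub.load('pytorch/vision', 'fcn_resnet50', pretrained=True)\nmodel.eval()"
made_up = "import torch\nmodel = torch.hub.load('pytorch/vision', 'fcn_resnet999', pretrained=True)"
```

Matching on the syntax tree rather than on raw strings means extra surrounding code (imports, `model.eval()`) or different formatting does not cause a correct call to be marked wrong.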

Retrievers, which help language models find relevant information in large collections, played a crucial role in these evaluations. The researchers incorporated a ground-truth retriever, effectively a perfect search engine that always returns the correct API documentation, into the fine-tuning pipeline. With this retriever, Gorilla improved by 12.37% on TorchHub and by 23.46% on HuggingFace compared with training without a retriever.
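In code, a ground-truth (oracle) retriever is almost trivial: during evaluation the correct API for each instruction is already known, so the retriever simply looks up that API's documentation. The sketch below assumes that setting; the `Retriever` protocol, the `OracleRetriever` class, and the small document dictionary are hypothetical names chosen for illustration.

```python
from typing import Dict, Protocol


class Retriever(Protocol):
    """Anything that maps an instruction to one API documentation record."""

    def retrieve(self, instruction: str) -> Dict[str, str]:
        ...


class OracleRetriever:
    """Ground-truth retriever: returns the doc of the known correct API.

    Only possible during evaluation, where each instruction's correct API is
    known in advance; a real retriever has to infer it from the text alone.
    """

    def __init__(self, docs_by_api: Dict[str, Dict[str, str]], answer_key: Dict[str, str]):
        self.docs_by_api = docs_by_api  # api_name -> documentation record
        self.answer_key = answer_key    # instruction -> correct api_name

    def retrieve(self, instruction: str) -> Dict[str, str]:
        return self.docs_by_api[self.answer_key[instruction]]


docs = {
    "fcn_resnet50": {"api_name": "fcn_resnet50", "functionality": "Semantic segmentation"},
    "resnet50": {"api_name": "resnet50", "functionality": "Image classification"},
}
retriever: Retriever = OracleRetriever(docs, {"Segment this street photo.": "fcn_resnet50"})
print(retriever.retrieve("Segment this street photo.")["api_name"])  # fcn_resnet50
```

Since the oracle cheats by reading the answer key, it gives an upper bound on what retrieval can contribute; any practical retriever can only approach it.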

However, there is still room for improvement, because practical retrievers are far less accurate than the ground-truth retriever. For instance, using the GPT-Index (now LlamaIndex) retriever resulted in a 29.20% accuracy degradation, and using the BM25 retriever led to a 52.27% accuracy degradation.
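BM25 is a classic lexical ranking function: it scores a document by how often the query's terms appear in it, weights each term by how rare it is across the collection, and normalizes for document length. Below is a compact sketch with the common defaults k1 = 1.5 and b = 0.75 and naive whitespace tokenization; those parameters and the toy API descriptions are assumptions, not the retriever configuration used in the paper. Because BM25 matches words rather than meaning, an instruction phrased differently from an API's description can pull in the wrong document, which is one plausible reason a weak retriever drags accuracy down.

```python
import math
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return text.lower().split()


class BM25:
    def __init__(self, docs: List[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.docs = [tokenize(d) for d in docs]
        self.avgdl = sum(len(d) for d in self.docs) / len(self.docs)
        self.tf = [Counter(d) for d in self.docs]  # term counts per document
        # Document frequency: in how many documents each term appears.
        df = Counter(term for d in self.docs for term in set(d))
        n = len(self.docs)
        # Okapi BM25 IDF with +1 inside the log so it never goes negative.
        self.idf = {t: math.log((n - f + 0.5) / (f + 0.5) + 1) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.tf[i], len(self.docs[i])
        total = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            f = tf[term]
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / norm
        return total

    def top(self, query: str) -> int:
        """Index of the best-scoring document."""
        return max(range(len(self.docs)), key=lambda i: self.score(query, i))


api_docs = [
    "fcn_resnet50 semantic segmentation of images with a fully convolutional network",
    "resnet50 image classification on imagenet",
    "bert text classification and sentiment analysis",
]
bm25 = BM25(api_docs)
best = bm25.top("semantic segmentation of street images")
```

Note that "images" in the query does not match "image" in the second description; exact-token matching like this is cheap but brittle.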

The potential is clear: with a better retriever, retriever-aware fine-tuning could be the way forward. More accurate retrievers would help models like Gorilla find the right documentation more reliably, leading to even more impressive results in the future.

Adapting To Changes in APIs

One of Gorilla's most impressive features is its adaptability. API documentation evolves rapidly, and it is often updated faster than LLMs can be re-trained or fine-tuned, which poses a significant challenge. Gorilla's retriever-aware training, however, lets it adapt readily to changes in API documentation.

For instance, Gorilla can react to changes in an API, such as FCN's backbone being upgraded from ResNet-50 to ResNet-101. It can also adjust to shifts in API sources, which matters because organizations may change their preferred model registries over time. This adaptability helps Gorilla stay relevant and accurate as the underlying documentation changes.
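Because the documentation reaches the model through the prompt rather than through its weights, updating the document store is enough to change what the model sees at test time. The sketch below shows that mechanism for the FCN example. The in-memory `doc_store`, the `build_prompt` helper, and the appended wording are hypothetical; `fcn_resnet50` and `fcn_resnet101` are real model entries in the `pytorch/vision` TorchHub repository.

```python
import json
from typing import Dict

# Hypothetical in-memory document store keyed by task.
doc_store: Dict[str, Dict[str, str]] = {
    "semantic_segmentation": {
        "api_name": "fcn_resnet50",
        "api_call": "torch.hub.load('pytorch/vision', 'fcn_resnet50', pretrained=True)",
    }
}


def build_prompt(instruction: str, task: str) -> str:
    """Append whatever documentation the store currently holds (wording assumed)."""
    doc = json.dumps(doc_store[task])
    return f"{instruction}\nUse this API documentation for reference: {doc}"


before = build_prompt("Segment this street photo.", "semantic_segmentation")

# The documentation changes: the FCN backbone is upgraded to ResNet-101.
# No retraining happens; only the store entry is replaced.
doc_store["semantic_segmentation"] = {
    "api_name": "fcn_resnet101",
    "api_call": "torch.hub.load('pytorch/vision', 'fcn_resnet101', pretrained=True)",
}
after = build_prompt("Segment this street photo.", "semantic_segmentation")
```

Updating the store only changes the model's input; whether the model actually follows the new documentation is what retriever-aware training is meant to ensure.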

Conclusion

Gorilla is a game-changer for LLMs and API calls. It generates reliable API calls to ML models with significantly less hallucination, adapts to changes in API usage at test time, and can satisfy constraints when picking APIs. In a world where APIs act as a universal language that lets diverse systems communicate, Gorilla's ability to use them correctly can boost how well LLMs interact with tools in the wider world.

Read the paper, "Gorilla: Large Language Model Connected with Massive APIs," and run the code for yourself.

The future looks bright for LLMs and API calls. With models like Gorilla leading the way, we can expect further advancements in this field. The potential applications are vast, and as technology continues to evolve, the practical utility of models like Gorilla will only increase. The future is here, and it's powered by Gorilla.